Week 7 and reflections

Recap of final performance

This week was really fun, with lots of changes and lots of learning. The first struggle was finding the correct power for the NeoPixel LED lights with the Arduino. I tried a few different things, but it seems I needed a specific barrel connector to go from the jumper-style cables to the Arduino. We had done a lot of research on the coding end of things, but without the correct power supply it proved very difficult to finish this additional part of the performance, so we decided to scrap the audio-reactive lights.

Another reflection is the number of laptops: making sure they all worked correctly at the venue proved to be a time-consuming issue. Even though we prepped the laptops ahead of time, one of them would not connect to the network, which ate up a lot of the performance time.

I also noticed that I had not been aware of how much light these projectors emit. I think documenting the work would need an even larger space, or a really nice camera and lots of time: the high dynamic range between the dark and bright areas caused some issues with recording everything cleanly. In the future I might even keep the house lights on so that it looks good on camera, rather than only looking good in the room for people watching in real time.

Lastly, I think the real success of this project was performing live. Even after all the practice, and feeling as if I have improved greatly with live coding, the rush of knowing the performance has started, people are watching, and the camera is rolling makes each of these moments special and something you can never really repeat. They are moments in time.

We look forward to live coding more in the future and see big opportunities to do more room-scale projection. I loved the way you could turn and get different bits of information clearly as the performance carried on. I also loved that there is no single central focus point: even though we performed from the center, the attention is still very much away from the performers, which allows for a level of comfort of not feeling as though eyes are looking directly at you.

The biggest unexpected surprise of the performance was discovering that, with the screens across from each other, the cameras acted as a real-time, in-person feedback loop that could be manipulated through code. It makes us want to work with mirrors and different types of reflective material or meshes to see what is possible when creating a space for people to immerse themselves in live coding.

Below are images and moments from our performance.

Snippets of the performance in 360. Use a headset or just mouse around.

    # Bharat Code Sample
    # written for Sonic Pi in Ruby

    use_bpm 134

    # Four-chord progression stored as a ring so .tick wraps around
    chords = [(chord :D, :minor7),
              (chord :Bb, :major7), (chord :F, :major7),
              (chord :C, "7")].ring

    c = chords[0]

    live_loop :drums do
      # Main groove: kick on the beat, closed cymbal and bass hit on the off-beat
      16.times do
        sample :bd_haus
        sample :bd_boom
        sleep 0.5
        sample :drum_cymbal_closed, amp: 0.5
        sample :bass_hit_c, amp: 0.6, release: 1
        sleep 0.5
      end
      # Short snare fill, then a splash before the loop restarts
      4.times do
        sample :sn_dolf, amp: 0.3
        sleep 0.125
      end
      sample :drum_splash_hard, amp: 0.2
    end

    live_loop :melody do
      cue :drums
      with_fx :slicer, amp: 0.4 do
        use_synth :fm
        16.times do
          # Root note three times, then the fifth and the third of the chord
          3.times do
            play c[0]
            sleep 0.5
          end
          play c[2]
          sleep 0.25
          play c[1]
          sleep 0.25
          # Move on to the next chord in the ring
          c = chords.tick
        end
      end
    end
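As a rough check on how the two loops line up, the sleeps in the Sonic Pi sample can be added up. This is a small JavaScript sketch (the same language as the Hydra samples), not part of the performance code. The function name `beatsToSeconds` is made up for illustration, and it assumes `use_bpm 134` applies to both loops.

```javascript
// Beats slept in one pass of each live_loop, read off the Sonic Pi sample.
const BPM = 134;

// :drums -> 16 x (0.5 + 0.5) beats, then a 4-hit snare fill at 0.125 each
const drumBeats = 16 * (0.5 + 0.5) + 4 * 0.125;

// :melody -> 16 x (three notes at 0.5 beats, then two notes at 0.25 beats)
const melodyBeats = 16 * (3 * 0.5 + 0.25 + 0.25);

// Convert a number of beats to seconds at the given tempo.
function beatsToSeconds(beats, bpm) {
  return (beats * 60) / bpm;
}

console.log('drums :', drumBeats, 'beats,', beatsToSeconds(drumBeats, BPM).toFixed(2), 's');
console.log('melody:', melodyBeats, 'beats,', beatsToSeconds(melodyBeats, BPM).toFixed(2), 's');
```

One drum pass is 16.5 beats and one melody pass is 32 beats, so two drum passes (33 beats) run one beat longer than a melody pass, and the loops slowly shift against each other.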

    // Darren Left Laptop Code Sample
    // All code is for Hydra.xyz
    // running on Atom + Live Server

    a.show()
    s0.initVideo("insert live server address here")

    src(s0).modulate(osc(10),.2).modulate(o0,2)
      .mask(shape(4)).modulateRepeat(noise(2),fft0)
      .scrollY(2,.2).out()

    // Helper functions; call setup1() once before running the chain above,
    // since that is where fft0 is defined
    function setup1(){
      fft0 = () => (a.fft[0]*1.2)
      fft1 = () => (a.fft[1]*Math)
      fft2 = () => (a.fft[3]*3.2)
      fft3 = () => (a.fft[6]*1)
      render0 = () => render(o0)
      render1 = () => render(o1)
      render2 = () => render(o2)
      render3 = () => render(o3)
      return
    }
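The `fft0` to `fft3` helpers work because Hydra re-evaluates any function passed as a parameter on every frame, so each helper returns the current level of one audio bin multiplied by a gain. Below is a minimal sketch of the same idea that can run outside Hydra. The `audio` object is a stand-in for Hydra's `a` with made-up values, and `makeBinScaler` is a hypothetical helper name, not part of Hydra.

```javascript
// Stand-in for Hydra's audio object `a`. In the editor, a.fft is filled in
// from the microphone; the values here are made up for the example.
const audio = { fft: [0.5, 0.25, 0.0, 1.0] };

// Build a function that reads one bin and scales it. If the bin is missing
// (index beyond the number of bins), fall back to 0 instead of NaN.
function makeBinScaler(source, bin, gain) {
  return () => {
    const level = source.fft[bin];
    return typeof level === 'number' ? level * gain : 0;
  };
}

const level0 = makeBinScaler(audio, 0, 2);
const level6 = makeBinScaler(audio, 6, 1);

console.log(level0()); // reads bin 0 as it is right now
audio.fft[0] = 0.75;   // the next "frame" sees the new level
console.log(level0()); // same function, updated value
console.log(level6()); // bin 6 does not exist in this stand-in, so 0
```

In Hydra, a function built this way could be passed wherever `fft0` is used, for example as the first argument to `rotate`.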

    // Darren Center Laptop Code Sample
    // written for Hydra.xyz using javascript

    s0.initCam(3)
    src(s0).out()

    // Darren Right Laptop Code Sample
    // written for Hydra.xyz using javascript

    a.show()
    osc(1,.2,30).rotate(fft0,2).mask(shape(3))
      .modulate(o0,.2).rotate(30,.1).modulate(o0,2)
      .scale(2.2).repeat(4).scrollX(3,.2).out(o0)

BTS Live Code Performance

Week Six

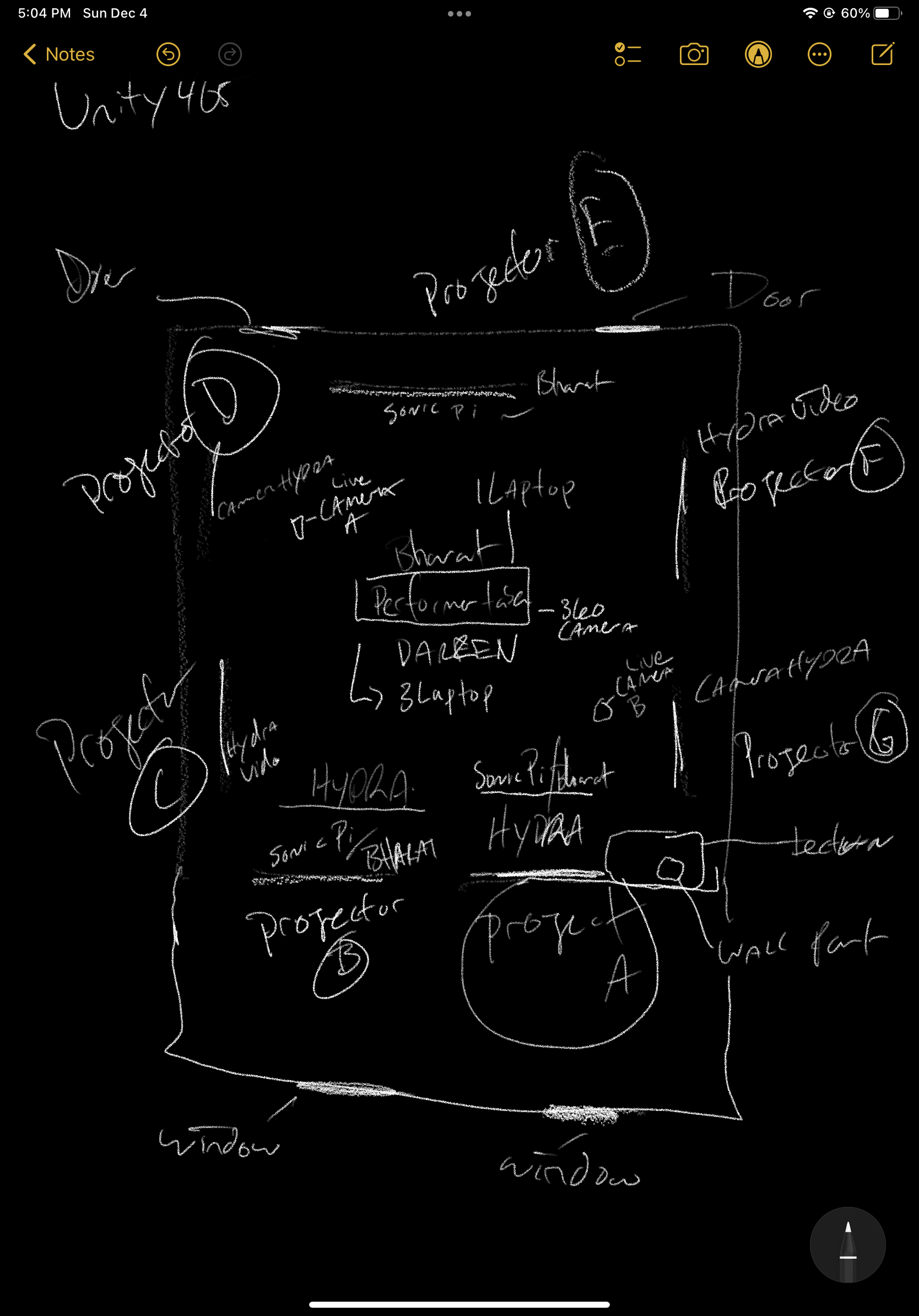

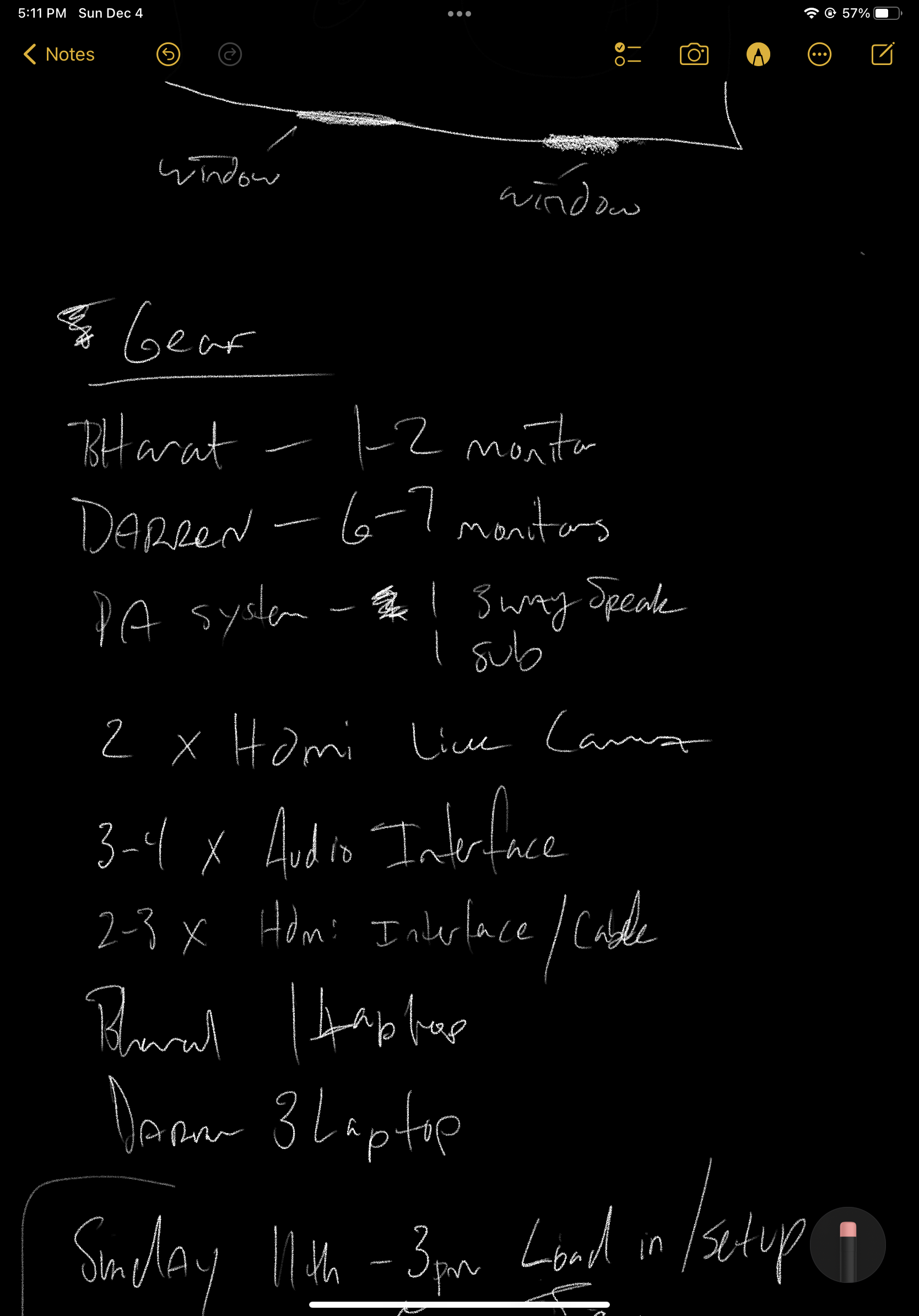

This week covered setup and configuration of the room: mapping the projectors to the different laptops, laying out the camera locations, and planning how we will perform on Sunday the 11th at 7:30pm in Unity 405.

The run of the day is below, alongside a test recording of the screens running in the space. Next we will install a PA system, microphones, cameras, and a 360 camera, and cover the doors to help block the light. We will also finalize the base code we will be performing with, prep all the equipment, and obtain the material or fabric to cover the doors.

We will also invite people to see the performance while we are documenting it on Sunday the 11th in Unity 405 at 7:30pm.

Week 5

The Kondor Blue HDMI-in to USB-C adapters and the Power Pixel lights arrived. This week involves programming and testing the lights, a test run of how to connect to the projector in Unity 405, adding more code, and practicing live coding for the final performance.

A live coding practice session is scheduled for this Sunday, and we can pick the final practice day after that session.

Below are examples of the single input source USB-C inputs with two cameras.

High Power Pixel RGB LED MODULE PCB

Live Capture Card HD Video and Audio (HDMI in to USB-C)

Week Four

Marching.js practice session for final production.

This week we were also able to order the three Kondor Blue HDMI to USB-C adapters for the camera feeds, and the NeoPixel LED lights that will run with the Arduino. These should be here by next week so we can finish building the final user interaction. We ordered two sets of NeoPixel LED lights: one is a single LED and the other is a strip. The single LED lights can be connected together in a strip, and we have 8 of those.

The next step will be getting the LEDs to turn on and programming the colors. From there we can add a sensor so viewers will have a way to interact with these lights, and last we will stage the lights in a practice space.

I also think using two Arduinos could make for some better interactions and give us the ability to use both sets of LEDs.

Week Three

Final Performance Marching.js work session

Spent some time using marching.js to get better control of moving and using objects, applying textures, and an overall understanding of the language. I am still finding it difficult to add more than 1-3 objects in a space; often the shapes or geometries are lost in the process. Audio reactions are working a lot better, and I am able to get more objects moving in response to sound faster.

I spoke with ATC and they said they have screens that we can use as well as projectors. We just need to let them know the date and the location, and we can email them for assistance with this process.

Next steps are figuring out how to work with the Arduino to control the ambient lights, figuring out what the visuals should be, and seeing whether it is possible to call the different cameras from inside the editor or whether we will use a video switcher to switch from camera to camera while still adding effects and feedback loops via Hydra.

One of the cameras should be pointed at the audience, as this will add to the immersion of the viewer in the space: they are also being incorporated into the visual.

Control the video. Remotely.

Low voltage. 12v power supply. LED….

Week Two

So far this week I have spent some time finishing the test run of the multi-cam rig with two cameras, with the third feed coming from the computer, going into the switcher, and back into the computer, which immediately creates feedback. However, I did not have a third camera with HDMI out available at the moment. The switching between cameras went really well, and I was able to access the switcher using the initCam function and just calling the (5) option for the camera, whereas my webcam is option 1. As soon as you run the line of code it switches to that camera option, which is really nice because we do not have to rely on a webcam for our front of house camera.
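The number passed to `initCam` picks one entry from the browser's list of video inputs, which is why the switcher and the webcam show up under different numbers. The sketch below lists those inputs using the standard `navigator.mediaDevices.enumerateDevices()` browser API. It assumes Hydra numbers cameras in the same order the browser reports them, starting at 0. The helper names `cameraIndexTable` and `printCameraIndexes` are hypothetical, and the device labels in the example list are made up.

```javascript
// Keep only cameras from a device list and pair each with the index
// one would try in s0.initCam(index).
function cameraIndexTable(devices) {
  return devices
    .filter((device) => device.kind === 'videoinput')
    .map((device, index) => ({
      index,
      // Browsers leave labels empty until camera access has been granted.
      label: device.label || '(label hidden until camera access is granted)',
    }));
}

// Browser-only wrapper: asks the browser for its devices and prints the table.
async function printCameraIndexes() {
  if (typeof navigator === 'undefined' || !navigator.mediaDevices) {
    return []; // not running in a browser
  }
  const devices = await navigator.mediaDevices.enumerateDevices();
  const table = cameraIndexTable(devices);
  console.table(table);
  return table;
}

// Example with a made-up device list, so the helper can be tried anywhere.
const exampleDevices = [
  { kind: 'audioinput', label: 'Built-in Microphone' },
  { kind: 'videoinput', label: 'Built-in Webcam' },
  { kind: 'videoinput', label: 'HDMI Capture' },
];
console.log(cameraIndexTable(exampleDevices));
```

Running `printCameraIndexes()` in the browser console before a session is one way to confirm which number belongs to the switcher without trial and error.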

Multi-camera test using an HDMI switcher and Hydra.

Next, I will spend time finishing the install of Three.js, which I think might need to happen in VS Code, and I will also see if the generic shader code I have found on the web can work with the Kode software. I will also see if I can get Veda to work with VS Code. After this I will go back and make another test performance with Hydra using the front of house camera rig, and also develop more shader code for marching.js.

*****Update*******

After listening to Haru Ji speak in Charlie’s class, I am thinking that maybe one of the cameras hooked up to the switcher should actually be looking at the audience. This would allow the viewer to possibly feel more immersed in the work, as they are no longer just looking at the performer and visuals or listening to sound; they are also combined with this imagery and are influencing the experience.

Week One

Installed Kode and working on installing Three.js, which will be the way the visuals are controlled on the third screen. The front screen will be Hydra plus the multi-camera rig, the second screen will be Marching.js with audio-reactive visuals, and I would like to experiment with a third shader.

Currently, Kode from Hexler.net does not share documentation on how to write shaders for it, so I am hoping some of the leads I have found about shader code are universal across the board.

Lastly, I looked into a few tutorials on how to create motion-controlled lights using Arduino, so I am hoping to start building a test model of a controllable light by next week.

Front of house test recording: video input + audio reactive. Visuals work for the front of house camera, which will be fed the camera feed of the performer. For this feedback loop I just used a video input, which can be swapped for a camera source.

Utilizing the FFT and working with the webcam, I was able to apply effects to the webcam feed. I will add a cine camera to the web input and see what is possible with better optics. It might also be possible to have a switcher to move around to different cameras in the space and have the same effects applied to the images. I also wonder if it is possible to code while filming.

One of the side walls will use marching.js, and I am still undecided on the third projection, but this is a test using marching.js with audio-reactive visuals.

Next, I would like to test and confirm that we can connect multiple cameras to the video switcher and then access the video switcher via the .initCam function. I am also hoping that the video effects will work seamlessly across the different camera angles. After this is confirmed I will finish mocking up the visuals for the third screen using the additional shader language.

I also need to do some location scouting this week, and I would like to run the idea of having “aerial codes” or “movements in the sky” be the title of the visual work. Lately I have been looking at the sky a lot and all its different colors, and even though the work will be released in winter, I still think the vibe will be nice for making digital effects.

Lastly, I am curious if there is a more aggressive way to control the speed of the image as I have noticed that many of the still images look really good but they go by so fast.

3 Channel Live Coding Performance Starring The Virtual Shaman

For this project I will be performing with Bharat, who will primarily be constructing the audio using Gibber.cc.

I plan to create a room-scale performance space for presenting our performance, but also as a space for recording it. As I am in charge of visuals, I will be using a mix of Hydra, Veda, Three.js, and Matter.js alongside interactive hardware and tools such as Arduino, OSC, and TouchDesigner.

For the performance we will install speakers, lights, projectors, and cameras that will hopefully work across three different walls in the space, with Bharat in the middle. The goal is that two of the walls could be FFT responsive and the front wall could be a feedback loop working with a camera. We would also like to provide live interactions with the lights, which could possibly be done through OSC.

This performance will be a way for us to explore a more professional or exhibition-style presentation of the work, as we strive to move away from a documentary look of how the work is experienced by those not present for the actual performance. This can also be expanded upon with a 360 camera, allowing the audience to use a VR headset if they wish to view the performance in a different way.

Schedule

Week 1:

Testing of software, languages, and different platforms to decide on the final mix and how we will work together.

This also includes research around different methods and combining quick test examples to find what works best for collaboration.

Week 2:

Test coding session with Sonic Pi / Gibber for song creation

Working with Arduino and lights to start building connectivity

Scout locations for performance space.

Secure projectors

Test coding sessions with marching.js / hydra+camera / ?? (there will be a third platform from week 1)

Week 3:

Testing and development 2nd phase

Week 4:

Testing and development 2nd phase

Week 5:

Finalize basic structure of song and theme so visuals can be aligned better with the music

Week 6:

Test performance with controlled light and audio reactive feedback

Final staging of the space

Plan install

Week 7:

Final draft performance and recording of test performance

Make sure everything works

Potential Room Layout